The use of facial recognition technology is rapidly gaining momentum. Already integrated into many aspects of our day-to-day lives, it is one of the best-known and most widely used applications of machine learning. The technology compares a person’s face from a digital image or live feed against a database of collected faces: it detects and measures facial features in the given image in order to identify a specific person, often with a very high success rate.

Ostensibly, facial recognition technology is a force for good. One increasingly common use is phone unlocking: users simply point their phone’s camera at their face and the phone unlocks almost instantly, provided it is the right face, of course. This has become more advanced over the last few years. For instance, Apple’s Face ID works in the dark by using an infrared “flash” that the camera can pick up in order to read the person’s face. As well as being efficient and hands-free, it highlights the significant strides made in lock security.

Another popular application of face recognition is ID verification. For instance, hospitals in Japan are using face recognition technology to scan patients’ faces when they arrive, after which the hospital can bring up their medical records, sign them in, or book an appointment, among other things. Check out this video to see it in practical application!

By 2024, the global facial recognition market could generate $7 billion in revenue. Commercial use of the technology is growing quickly, with a plethora of business opportunities, including smarter advertising based on who is looking at a screen at a gas station, or recognizing VIPs at large events. It is also increasingly being employed to find missing people. In India in 2018, for example, police traced 3,000 missing children in just four days using facial recognition technology. The technology can equally be used to detect intruders or wanted suspects; Taylor Swift used it to keep known stalkers out of one of her concerts in Los Angeles in 2018.

Face Detection on the VIA VAB-950

We have implemented face detection on the VIA VAB-950 using Google’s ML Kit. The face detection API allows users to detect faces in a live feed or static image, identify key facial features (such as ears, eyes, nose, and mouth), and get the contours of the detected faces. Check out the GitHub repository to download it for yourself. ML Kit is used alongside the CameraX API, and the physical camera is a MIPI CSI-2 camera.

There are three main classes we will look at today: “CameraXLivePreviewActivity.java”, “FaceDetectorProcessor.java” and “FaceGraphic.java”, with “FaceDetectorProcessor.java” arguably being the most important, as this is where the main logic of taking the camera preview and running it through a FaceDetector object happens.

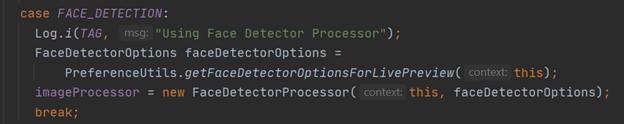

First up, “CameraXLivePreviewActivity.java” is where the CameraX use cases (Preview and Analysis) are set up. Essentially, this class provides a bridge between the live feed coming from the camera and the main logic of the program. Its main methods are “bindAnalysisUseCase” and “bindPreviewUseCase”. As the name suggests, “bindPreviewUseCase” provides the back end for the image preview that the user sees on screen, while “bindAnalysisUseCase” acts as the bridge. First, it sets the face detector options to those currently selected for the face detection API, then sets the imageProcessor to a new instance of FaceDetectorProcessor created with these options.

Then, depending on which imageProcessor is selected, the code will process the input image from the Preview (which happens to be an ImageProxy):
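
One detail worth keeping in mind at this step is orientation: a camera frame can arrive rotated relative to the screen, so anything drawn on top of it needs the frame's width and height after rotation. The sketch below shows only that idea in plain Java, assuming the rotation is reported in degrees as a multiple of 90. The names `FrameOrientation` and `uprightSize` are hypothetical and are not taken from the project or from ML Kit.

```java
// Hypothetical helper, not part of the ML Kit sample: works out the frame size
// as it should appear on screen once the reported rotation is applied.
final class FrameOrientation {

    private FrameOrientation() {}

    /**
     * Returns {width, height} after rotation.
     * rotationDegrees must be a multiple of 90 (for example 0, 90, 180 or 270).
     */
    static int[] uprightSize(int width, int height, int rotationDegrees) {
        if (rotationDegrees % 90 != 0) {
            throw new IllegalArgumentException("rotation must be a multiple of 90");
        }
        // Normalise to the range 0..359 so that -90 behaves like 270.
        int normalized = ((rotationDegrees % 360) + 360) % 360;
        boolean sideways = normalized == 90 || normalized == 270;
        return sideways ? new int[] {height, width} : new int[] {width, height};
    }
}
```

For example, `FrameOrientation.uprightSize(640, 480, 90)` returns `{480, 640}`, while a rotation of 0 or 180 leaves the dimensions unchanged.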

The two use case methods discussed above are wrapped together by the “bindAllCameraUseCases” method.

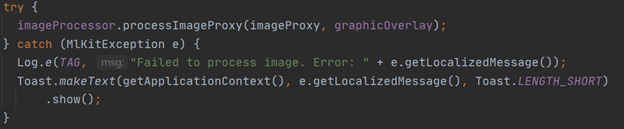

Next is the “FaceDetectorProcessor.java” class, which extends the “VisionProcessorBase.java” base class. Here, an instance of a FaceDetector object from the face detection API is created, and its “process” method is then called on each image fed into it.

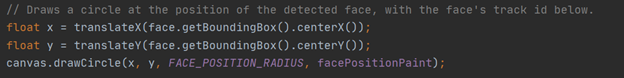
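
The relationship between a base class and a concrete processor can be pictured with a small plain-Java model. This is only a sketch under simplifying assumptions: `FrameProcessor`, `CountingFaceProcessor` and the `int[]` stand-in for a frame are hypothetical names invented for illustration, detection runs synchronously here, and none of it is the project's or ML Kit's actual code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a base class in the spirit of VisionProcessorBase:
// it owns the shared flow (run detection, then report success or failure)
// and leaves the detection itself to subclasses.
abstract class FrameProcessor<T> {
    final List<String> log = new ArrayList<>();

    // Subclasses supply the actual detection step.
    protected abstract T detect(int[] frame) throws Exception;

    // Subclasses decide what to do with a successful result.
    protected abstract void onSuccess(T result);

    protected void onFailure(Exception e) {
        log.add("failed: " + e.getMessage());
    }

    // Template method shared by every processor.
    final void process(int[] frame) {
        try {
            onSuccess(detect(frame));
        } catch (Exception e) {
            onFailure(e);
        }
    }
}

// Hypothetical subclass: "detects" faces by counting non-zero entries,
// purely so the example has something to report.
class CountingFaceProcessor extends FrameProcessor<Integer> {
    @Override
    protected Integer detect(int[] frame) {
        if (frame == null) {
            throw new IllegalArgumentException("no frame");
        }
        int faces = 0;
        for (int value : frame) {
            if (value != 0) {
                faces++;
            }
        }
        return faces;
    }

    @Override
    protected void onSuccess(Integer faces) {
        log.add("faces: " + faces);
    }
}
```

The point of the split is that the shared flow lives in one place, so a different detector would only need its own `detect` and `onSuccess`.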

Finally, we have “FaceGraphic.java”. This class contains the methods for drawing boxes, lines, and labels onto the image preview for the user to see. The main method in this class is “draw”, which takes a Canvas object as input; the canvas is the surface drawn over the live feed from the camera. First, a circle is drawn at the position of the face:

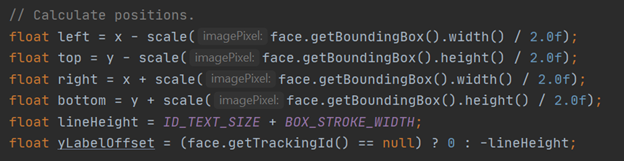

It then calculates exact positions of the face:
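
As a rough illustration of this step, the sketch below turns a detected box (centre and size, in image pixels) into screen coordinates, assuming a single uniform scale factor and optional horizontal mirroring for a front-facing camera. The names `OverlayMath`, `toOverlayX`, `toOverlayY` and `boxCorners` are hypothetical, and a real overlay may also apply offsets that are left out here.

```java
// Hypothetical helper for illustration only; not the project's FaceGraphic code.
final class OverlayMath {
    private final float scale;        // image pixels to overlay pixels
    private final float overlayWidth; // width of the on-screen overlay
    private final boolean mirrored;   // true for a mirrored (front camera) preview

    OverlayMath(float scale, float overlayWidth, boolean mirrored) {
        this.scale = scale;
        this.overlayWidth = overlayWidth;
        this.mirrored = mirrored;
    }

    /** Maps an x coordinate from image space to overlay space. */
    float toOverlayX(float imageX) {
        float scaled = imageX * scale;
        return mirrored ? overlayWidth - scaled : scaled;
    }

    /** Maps a y coordinate from image space to overlay space. */
    float toOverlayY(float imageY) {
        return imageY * scale;
    }

    /** Returns {left, top, right, bottom} in overlay coordinates. */
    float[] boxCorners(float centerX, float centerY, float width, float height) {
        float x = toOverlayX(centerX);
        float y = toOverlayY(centerY);
        float halfWidth = width * scale / 2.0f;
        float halfHeight = height * scale / 2.0f;
        return new float[] {x - halfWidth, y - halfHeight, x + halfWidth, y + halfHeight};
    }
}
```

Mirroring only the centre and then adding the half-widths keeps `left` smaller than `right` in both modes, which is what a rectangle-drawing call generally expects.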

Heights and widths of label boxes are then calculated and drawn:

    // Calculate width and height of label box
    float textWidth = idPaints[colorID].measureText("ID: " + face.getTrackingId());
    if (face.getSmilingProbability() != null) {
      yLabelOffset -= lineHeight;
      textWidth =
          Math.max(
              textWidth,
              idPaints[colorID].measureText(
                  String.format(Locale.US, "Happiness: %.2f", face.getSmilingProbability())));
    }
    if (face.getLeftEyeOpenProbability() != null) {
      yLabelOffset -= lineHeight;
      textWidth =
          Math.max(
              textWidth,
              idPaints[colorID].measureText(
                  String.format(
                      Locale.US, "Left eye open: %.2f", face.getLeftEyeOpenProbability())));
    }
    if (face.getRightEyeOpenProbability() != null) {
      yLabelOffset -= lineHeight;
      textWidth =
          Math.max(
              textWidth,
              idPaints[colorID].measureText(
                  String.format(
                      Locale.US, "Right eye open: %.2f", face.getRightEyeOpenProbability())));
    }

    yLabelOffset = yLabelOffset - 3 * lineHeight;
    textWidth =
        Math.max(
            textWidth,
            idPaints[colorID].measureText(
                String.format(Locale.US, "EulerX: %.2f", face.getHeadEulerAngleX())));
    textWidth =
        Math.max(
            textWidth,
            idPaints[colorID].measureText(
                String.format(Locale.US, "EulerY: %.2f", face.getHeadEulerAngleY())));
    textWidth =
        Math.max(
            textWidth,
            idPaints[colorID].measureText(
                String.format(Locale.US, "EulerZ: %.2f", face.getHeadEulerAngleZ())));

    // Draw labels
    canvas.drawRect(
        left - BOX_STROKE_WIDTH,
        top + yLabelOffset,
        left + textWidth + (2 * BOX_STROKE_WIDTH),
        top,
        labelPaints[colorID]);
    yLabelOffset += ID_TEXT_SIZE;
    canvas.drawRect(left, top, right, bottom, boxPaints[colorID]);
    if (face.getTrackingId() != null) {
      canvas.drawText("ID: " + face.getTrackingId(), left, top + yLabelOffset, idPaints[colorID]);
      yLabelOffset += lineHeight;
    }

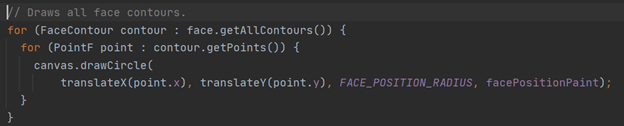
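
The arithmetic in the excerpt above boils down to two questions: how many label lines need room above the face box, and how wide the widest label is. The sketch below isolates that logic in plain Java. It assumes the offset starts at zero and that a tracking ID, when present, takes one line of its own; `LabelBox`, `labelOffset` and `widest` are hypothetical names, not part of the project.

```java
// Hypothetical helper written only to illustrate the arithmetic in the excerpt.
final class LabelBox {

    private LabelBox() {}

    /**
     * Returns how far above the face box the label area must start (a negative
     * offset), assuming the offset begins at zero. Values that were not computed
     * are passed as null and take up no line.
     */
    static float labelOffset(
            float lineHeight,
            Integer trackingId,
            Float smiling,
            Float leftEyeOpen,
            Float rightEyeOpen) {
        int lines = 3; // the three Euler angle lines are always reserved
        if (trackingId != null) lines++;
        if (smiling != null) lines++;
        if (leftEyeOpen != null) lines++;
        if (rightEyeOpen != null) lines++;
        return -lines * lineHeight;
    }

    /** Returns the widest of the measured label widths, like a chain of Math.max calls. */
    static float widest(float... widths) {
        float max = 0f;
        for (float width : widths) {
            max = Math.max(max, width);
        }
        return max;
    }
}
```

With a line height of 10, a tracking ID, and two of the three optional probabilities available, `labelOffset` returns -60: six lines of room above the box.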

A for-each loop is used to draw the face contours:

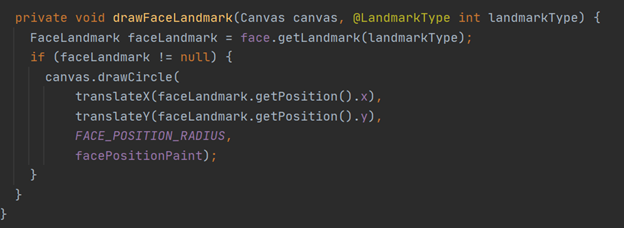
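
The shape of such a loop can be sketched in plain Java: each contour is a list of points, and every point is mapped to screen space before a dot is drawn for it. The `ContourSketch` class, its `Point` type, the `dotsFor` method and the single scale factor are hypothetical stand-ins; real drawing code would paint onto the Canvas rather than collect the points in a list.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for illustration; real drawing code would use a Canvas.
final class ContourSketch {

    /** Minimal immutable 2D point. */
    static final class Point {
        final float x;
        final float y;

        Point(float x, float y) {
            this.x = x;
            this.y = y;
        }
    }

    private ContourSketch() {}

    /** Visits every point of every contour and returns the scaled dot positions. */
    static List<Point> dotsFor(List<List<Point>> contours, float scale) {
        List<Point> dots = new ArrayList<>();
        for (List<Point> contour : contours) {
            for (Point point : contour) {
                dots.add(new Point(point.x * scale, point.y * scale));
            }
        }
        return dots;
    }
}
```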

There is also another small private method to draw face landmarks:

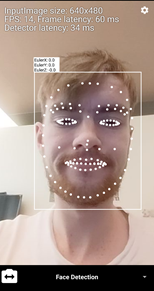
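
A detector may not find every landmark on every face, so a helper like this generally has to skip missing ones quietly. The plain-Java sketch below shows that guard, with a map standing in for the detected face and a counter standing in for the actual drawing call. `LandmarkSketch`, `put` and `drawLandmark` are hypothetical names chosen for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for a face with optional landmarks.
final class LandmarkSketch {
    private final Map<String, float[]> landmarks = new HashMap<>();
    int drawn = 0; // counts dots that would have been drawn

    /** Records a detected landmark position. */
    void put(String type, float x, float y) {
        landmarks.put(type, new float[] {x, y});
    }

    /** Draws the landmark if it was detected; returns false when it is missing. */
    boolean drawLandmark(String type) {
        float[] position = landmarks.get(type);
        if (position == null) {
            return false; // nothing detected for this type, so nothing to draw
        }
        drawn++; // stand-in for drawing a dot at (position[0], position[1])
        return true;
    }
}
```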

That’s about it! Now you should be able to just run your program and test out the results. Here is what the final preview should look like:
