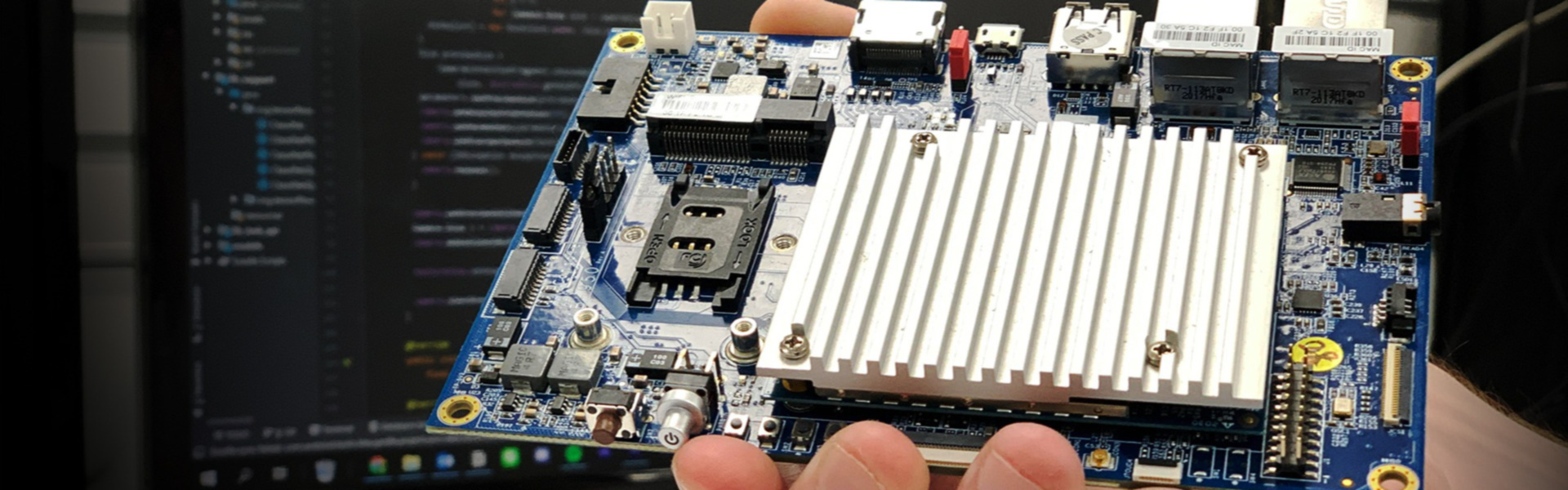

The VIA VAB-950 is VIA’s latest embedded board, bringing a host of new features. Today we will highlight its role in Android application development, specifically computer vision and machine learning, by walking through the creation of a simple object detection app. The app is written in Java and uses Google’s CameraX and ML Kit APIs, which let us add a live camera preview and load a custom TensorFlow Lite (.tflite) model into the app.

A Brief Introduction to Android, CameraX API, and ML Kit API

In Android app development, the code is split into two areas: “Activities” and “Layouts”. Activities define the app’s main logic, that is, how the different components work together to make the app behave as expected. Layouts, as the name suggests, are composed of “Views” that make up the app’s visual design. For this tutorial we use Android Studio as the IDE. It automatically generates a MainActivity.java file and an activity_main.xml file, which are the two main files we work with.

The CameraX API is the latest camera API from Google, building on its predecessor, Camera2. It greatly simplifies camera app development through “use cases”. Use cases make the API more approachable and help keep our code clean, structured and organized. This app uses two use cases: “preview” and “image analysis”. A third common use case, “image capture”, is not needed here because the app works with a live video stream rather than still photos.

Finally, the ML Kit API lets developers deploy machine learning features efficiently alongside CameraX. This tutorial covers loading a custom object classification .tflite model into our app. The app can also serve as a foundational template for any other machine learning ideas or models you may want to implement in the future.

Setup

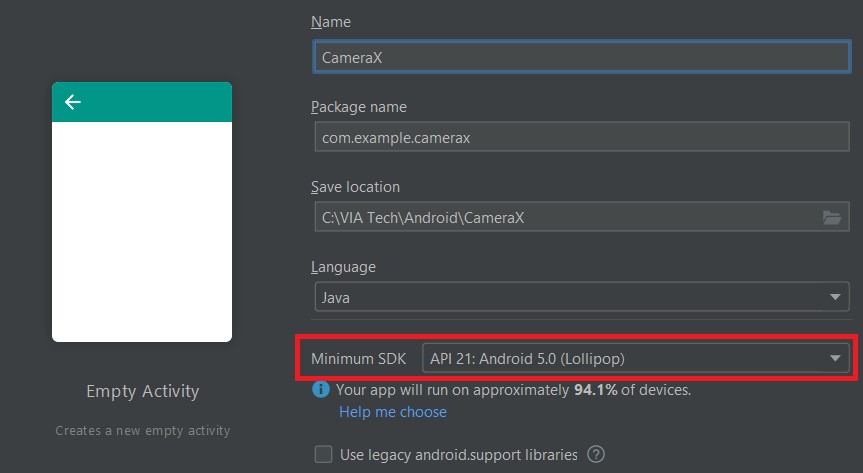

Before beginning development, we must set up our Android environment by installing the Java Development Kit (JDK) and Android Studio (step-by-step installation guides are available for both Windows and Mac). When creating a new project, make sure to set the minimum SDK to API 21, which is the lowest API level supported by CameraX.

Next, we need to modify the generated “build.gradle” and “activity_main.xml” files to set up the APIs we use. In your module-level build.gradle file, add the following lines to the android and dependencies sections:

android {

aaptOptions {

noCompress "tflite"

}

}

dependencies {

// CameraX API

def camerax_version = '1.1.0-alpha02'

implementation "androidx.camera:camera-camera2:${camerax_version}"

implementation "androidx.camera:camera-view:1.0.0-alpha21"

implementation "androidx.camera:camera-lifecycle:${camerax_version}"

// Object detection & tracking feature with custom model from ML Kit API

implementation 'com.google.mlkit:object-detection-custom:16.3.1'

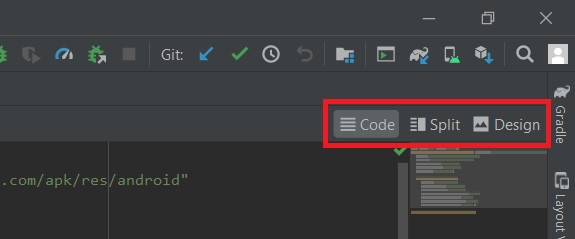

}Next, we need to add code to our activity_main.xml file (they will go inside the pre-existing “ConstraintLayout” section). To do this, when we click open your XML file, we will see three options near the top right: “Code”, “Split”, and “Design”:

Select “Code” and incorporate the following code:

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">
    <androidx.camera.view.PreviewView
        android:id="@+id/previewView"
        android:layout_width="match_parent"
        android:layout_height="584dp"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent">
    </androidx.camera.view.PreviewView>
    <TextView
        android:id="@+id/resultText"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_marginTop="32dp"
        android:textSize="24sp"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toBottomOf="@+id/previewView"
        app:layout_constraintHorizontal_bias="0.498" />
    <TextView
        android:id="@+id/confidence"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:textSize="24sp"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toBottomOf="@+id/resultText"
        app:layout_constraintHorizontal_bias="0.498"
        app:layout_constraintVertical_bias="0.659" />
</androidx.constraintlayout.widget.ConstraintLayout>

A “PreviewView” is the View that CameraX provides for displaying the live feed from a connected camera as a “preview”. A “ConstraintLayout” is the default root layout in our XML file’s Component Tree. It lets the developer position Views through constraints relative to the parent and to each other, so they keep the same arrangement regardless of which Android device runs the app. A “TextView” is simply a View that displays text. In our app, the two TextViews display the results of our machine learning inference.
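Both TextViews set app:layout_constraintHorizontal_bias="0.498", which places them almost exactly in the center. The arithmetic behind a horizontal bias can be sketched in plain Java. The sketch assumes a view constrained to both the start and end of its parent and deliberately ignores margins and padding. ConstraintBias and leftOffset are hypothetical names for this illustration only, not part of the ConstraintLayout API.

```java
// Hypothetical, simplified model of ConstraintLayout's horizontal bias for a
// view constrained to both the start and end of its parent (margins ignored).
final class ConstraintBias {

    /**
     * Returns the child's offset from the parent's left edge.
     *
     * @param parentWidth width of the parent, in pixels
     * @param childWidth  width of the child, in pixels
     * @param bias        value of layout_constraintHorizontal_bias (0.0 to 1.0)
     */
    static float leftOffset(int parentWidth, int childWidth, float bias) {
        if (bias < 0f || bias > 1f) {
            throw new IllegalArgumentException("bias must be between 0 and 1");
        }
        // The leftover space is split according to the bias:
        // 0 hugs the start edge, 1 hugs the end edge, 0.5 centers the view.
        int freeSpace = parentWidth - childWidth;
        return freeSpace * bias;
    }

    public static void main(String[] args) {
        // A 200 px wide TextView inside a 1080 px wide screen
        System.out.println(leftOffset(1080, 200, 0.5f));
        System.out.println(leftOffset(1080, 200, 0.498f));
    }
}
```

Take a 1080 px wide screen and a 200 px wide TextView. A bias of 0.5 places the view 440 px from the left edge, while 0.498 shifts it less than 2 px to the left, which is visually indistinguishable from centered.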

As for the VAB-950 itself, all we need is a micro-USB to USB-A cable and a CSI camera.

Code Implementation

The first challenge is connecting the XML layout and MainActivity together. For this, Android provides a fundamental method called “findViewById”. Given an id, it returns the View object that was created from our XML layout, so that we can work with it in Java. In our case, the start of our code should look like this:

public class MainActivity extends AppCompatActivity {

private PreviewView previewView;

private final int REQUEST_CODE_PERMISSIONS = 101;

private final String[] REQUIRED_PERMISSIONS = new String[]{"android.permission.CAMERA"};

private final static String TAG = "Anything unique";

private Executor executor;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);

getSupportActionBar().hide();

previewView = findViewById(R.id.previewView);

executor = ContextCompat.getMainExecutor(this);

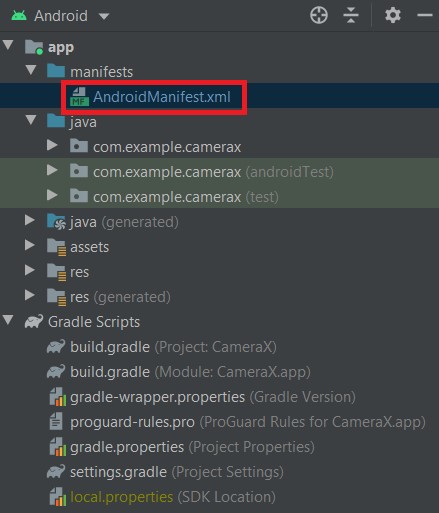

}If we go into the XML file and click “previewView”, we will see in the top right its id is “previewView”. This is what we use to instantiate the PreviewView by way of the R.id.previewView method. If you have used an Android device before, you may recall being asked to give the apps you use certain permissions to do certain tasks. The same thing needs to be done here, where we have to ask the user for camera permission as well as preventing the app running if this permission is not given. Firstly, we need to open the AndroidManifest.xml file:

Add the following two lines before the application section:

<uses-feature android:name="android.hardware.camera.any" />
<uses-permission android:name="android.permission.CAMERA" />

Then, add these lines to MainActivity.java:

import android.content.pm.PackageManager;
import android.widget.Toast;
import androidx.annotation.NonNull;
import androidx.core.app.ActivityCompat;
public class MainActivity extends AppCompatActivity {
    @Override
    protected void onCreate(Bundle savedInstanceState) {
        // ... code from the previous step ...
        if (allPermissionsGranted()) {
            startCamera();
        } else {
            ActivityCompat.requestPermissions(this,
                    REQUIRED_PERMISSIONS,
                    REQUEST_CODE_PERMISSIONS);
        }
    }
    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions,
                                           @NonNull int[] grantResults) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults);
        // Only react to the permission request made by this activity
        if (requestCode != REQUEST_CODE_PERMISSIONS) {
            return;
        }
        if (allPermissionsGranted()) {
            startCamera();
        } else {
            Toast.makeText(this, "Permissions not granted by the user.",
                    Toast.LENGTH_SHORT).show();
            finish();
        }
    }
    /**
     * Checks if all the permissions in the required permission array are already granted.
     *
     * @return true if all the permissions defined are already granted
     */
    private boolean allPermissionsGranted() {
        for (String permission : REQUIRED_PERMISSIONS) {
            if (ContextCompat.checkSelfPermission(getApplicationContext(), permission) !=
                    PackageManager.PERMISSION_GRANTED) {
                return false;
            }
        }
        return true;
    }

Next, we load our custom .tflite model by adding the following code to our “onCreate” method:

public class MainActivity extends AppCompatActivity {

// New field

private ObjectDetector objectDetector;

@Override

protected void onCreate(Bundle savedInstanceState) {

// Loads a LocalModel from a custom .tflite file

LocalModel localModel = new LocalModel.Builder()

.setAssetFilePath("whatever_your_tflite_file_is_named.tflite")

.build();

CustomObjectDetectorOptions customObjectDetectorOptions =

new CustomObjectDetectorOptions.Builder(localModel)

.setDetectorMode(CustomObjectDetectorOptions.STREAM_MODE)

.enableClassification()

.setClassificationConfidenceThreshold(0.5f)

.setMaxPerObjectLabelCount(3)

.build();

objectDetector = ObjectDetection.getClient(customObjectDetectorOptions);

}

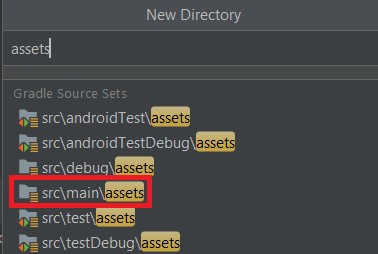

}The “setAssetFilePath” method looks into your root directory’s asset folder to see if there exists a .tflite model matching the provided string. This string therefore must match the name of whatever tflite model you wish to implement, be it one you have downloaded or one that you have created yourself. Therefore, we need to add an assets directory by right clicking the app folder, then “New”, then “Directory”. From there, we can search for “src/main/assets” and drop your .tflite file into the generated folder.
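The two classification options in the builder, setClassificationConfidenceThreshold(0.5f) and setMaxPerObjectLabelCount(3), shape which labels reach our app. Their combined effect on one detected object’s candidate labels can be sketched in plain Java as a rough mental model. This is not ML Kit’s actual implementation. The Label record is a hypothetical stand-in for DetectedObject.Label. Keeping a label that sits exactly on the threshold is an assumption.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model (not ML Kit code) of setClassificationConfidenceThreshold
// and setMaxPerObjectLabelCount applied to one object's candidate labels.
final class LabelFilter {

    /** Hypothetical stand-in for DetectedObject.Label. */
    record Label(String text, float confidence) {}

    static List<Label> filter(List<Label> candidates, float threshold, int maxLabels) {
        List<Label> kept = new ArrayList<>();
        for (Label label : candidates) {
            // Assumption: a label exactly at the threshold is kept
            if (label.confidence() >= threshold) {
                kept.add(label);
            }
        }
        // Most confident first, so truncating keeps the best labels
        kept.sort((a, b) -> Float.compare(b.confidence(), a.confidence()));
        return new ArrayList<>(kept.subList(0, Math.min(maxLabels, kept.size())));
    }

    public static void main(String[] args) {
        List<Label> candidates = List.of(
                new Label("cup", 0.40f),
                new Label("mug", 0.90f),
                new Label("bowl", 0.55f),
                new Label("vase", 0.70f),
                new Label("jar", 0.60f));
        // Same settings as the detector options: threshold 0.5, at most 3 labels
        for (Label label : filter(candidates, 0.5f, 3)) {
            System.out.println(label.text() + " " + label.confidence());
        }
    }
}
```

With these five made-up candidates, “cup” falls below the 0.5 threshold and “bowl” is the fourth-best survivor. Only “mug”, “vase” and “jar” remain.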

Moving on, we need to begin writing the “startCamera” method. The code for this method is here:

// Add to the imports at the top of MainActivity.java:
import android.util.Log;
import androidx.camera.lifecycle.ProcessCameraProvider;
import com.google.common.util.concurrent.ListenableFuture;
import java.util.concurrent.ExecutionException;
// Add inside the MainActivity class:
/**
 * Starts the camera by obtaining the camera provider and binding the use cases.
 */
public void startCamera() {
    ListenableFuture<ProcessCameraProvider> cameraProviderFuture =
            ProcessCameraProvider.getInstance(this);
    cameraProviderFuture.addListener(() -> {
        // The listener runs once the future completes, so the provider is now available
        try {
            ProcessCameraProvider cameraProvider = cameraProviderFuture.get();
            bindPreviewAndAnalyzer(cameraProvider);
        } catch (ExecutionException | InterruptedException e) {
            Log.e(TAG, "Could not get the camera provider", e);
        }
    }, executor);
}

Most of what happens in this method takes place under the hood. The main part to be aware of is the “ProcessCameraProvider”, an object that lets us bind CameraX use cases to a “lifecycle”. In our case the lifecycle owner is MainActivity, so as the app is opened and closed, CameraX starts and stops those use cases automatically. This binding happens in the “bindPreviewAndAnalyzer” helper method, which is where the use cases are actually set up.
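The key property of addListener is that the callback runs on the supplied executor only after the future has completed. That is why calling get() inside it does not block. The pattern can be sketched with the JDK’s CompletableFuture standing in for Guava’s ListenableFuture and a string standing in for the ProcessCameraProvider. ListenerPattern, addListener and simulate are hypothetical names, and this is an analogy rather than CameraX code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executor;

// JDK-only analogue of ListenableFuture.addListener(Runnable, Executor).
final class ListenerPattern {

    /** Runs the listener on the executor once the future completes, successfully or not. */
    static <T> void addListener(CompletableFuture<T> future, Runnable listener, Executor executor) {
        future.whenCompleteAsync((value, error) -> listener.run(), executor);
    }

    /** Simulates startCamera(): bind use cases once the provider is available. */
    static List<String> simulate(boolean providerAvailable) {
        CompletableFuture<String> providerFuture = new CompletableFuture<>();
        List<String> log = new ArrayList<>();
        // A direct executor stands in for ContextCompat.getMainExecutor(this)
        Executor direct = Runnable::run;
        addListener(providerFuture, () -> {
            try {
                // The future is already complete here, so get() returns at once
                log.add("bind use cases with " + providerFuture.get());
            } catch (ExecutionException e) {
                log.add("camera provider failed: " + e.getCause().getMessage());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, direct);
        log.add("listener registered");
        if (providerAvailable) {
            providerFuture.complete("camera provider");
        } else {
            providerFuture.completeExceptionally(new IllegalStateException("no camera"));
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(simulate(true));
        System.out.println(simulate(false));
    }
}
```

The listener is registered before the future completes, so the log always starts with "listener registered". The binding step runs only afterwards, mirroring how bindPreviewAndAnalyzer is called only once the camera provider is ready.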

First, the preview use case is implemented:

// Add to the imports at the top of MainActivity.java:
import android.util.Size;
import androidx.camera.core.CameraSelector;
import androidx.camera.core.Preview;
// Add inside the MainActivity class:
/**
 * Creates camera preview and image analyzer to bind to the app's lifecycle.
 *
 * @param cameraProvider a @NonNull camera provider object
 */
private void bindPreviewAndAnalyzer(@NonNull ProcessCameraProvider cameraProvider) {
    // Set up the viewfinder use case to display the camera preview
    Preview preview = new Preview.Builder()
            .setTargetResolution(new Size(1280, 720))
            .build();
    // Connect the preview use case to the previewView
    preview.setSurfaceProvider(previewView.getSurfaceProvider());
    // Choose the camera by requiring a lens facing
    CameraSelector cameraSelector = new CameraSelector.Builder()
            .requireLensFacing(CameraSelector.LENS_FACING_BACK)
            .build();
}

Circling back to the PreviewView in our activity_main.xml, we connect the “Preview” use case to previewView so that the app uses it as the surface for the camera preview. The “CameraSelector” object indicates which camera lens to use, since most Android devices have both a front and a rear camera. The VIA VAB-950’s firmware exposes its camera as a back-facing camera, so we set our CameraSelector to require a rear camera via “CameraSelector.LENS_FACING_BACK”.

If your preview appears stretched, try using the “setTargetAspectRatio” method instead of “setTargetResolution”. Use one or the other, not both, since CameraX does not allow a single builder to set both. Stretching appears to be a common issue with CameraX on certain devices, and it may persist even with setTargetAspectRatio. In that case there is little we can do on the app side until Google addresses it in the API. The “Preview.Builder” documentation lists the available configuration options.
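Switching to setTargetAspectRatio means choosing one of CameraX’s AspectRatio constants, RATIO_4_3 or RATIO_16_9. One way to choose is to start from the resolution you would otherwise have requested (such as the 1280x720 used above) and pick whichever standard ratio is closer. The sketch below is plain Java, with a hypothetical Ratio enum mirroring those two constants, so it runs without Android. In the app you would pass the matching AspectRatio constant to Preview.Builder.setTargetAspectRatio.

```java
// Plain-Java sketch: picks the standard aspect ratio closest to a resolution.
// Ratio is a hypothetical mirror of CameraX's AspectRatio.RATIO_4_3 / RATIO_16_9.
final class AspectRatioPicker {

    enum Ratio { RATIO_4_3, RATIO_16_9 }

    private static final double FOUR_BY_THREE = 4.0 / 3.0;
    private static final double SIXTEEN_BY_NINE = 16.0 / 9.0;

    static Ratio closest(int width, int height) {
        if (width <= 0 || height <= 0) {
            throw new IllegalArgumentException("width and height must be positive");
        }
        // Long side over short side, so portrait and landscape sizes behave the same
        double ratio = (double) Math.max(width, height) / Math.min(width, height);
        return Math.abs(ratio - FOUR_BY_THREE) <= Math.abs(ratio - SIXTEEN_BY_NINE)
                ? Ratio.RATIO_4_3
                : Ratio.RATIO_16_9;
    }

    public static void main(String[] args) {
        System.out.println(closest(1280, 720));
        System.out.println(closest(640, 480));
    }
}
```

For the 1280x720 resolution used in this tutorial, the sketch returns RATIO_16_9. The equivalent builder call would be setTargetAspectRatio(AspectRatio.RATIO_16_9).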

Next, we set up the image analysis use case, which is where the core machine learning inference happens. It receives frames from the camera stream and runs them through our custom tflite model:

// Add to the imports at the top of MainActivity.java:
import android.annotation.SuppressLint;
import android.media.Image;
import android.widget.TextView;
import androidx.camera.core.ImageAnalysis;
import androidx.camera.core.ImageProxy;
import com.google.android.gms.tasks.Task;
import com.google.mlkit.vision.common.InputImage;
import com.google.mlkit.vision.objects.DetectedObject;
import java.util.List;
// Add inside the MainActivity class:
/**
 * Creates camera preview and image analyzer to bind to the app's lifecycle.
 *
 * @param cameraProvider a @NonNull camera provider object
 */
private void bindPreviewAndAnalyzer(@NonNull ProcessCameraProvider cameraProvider) {
    // ... preview and cameraSelector from the previous step ...
    // Creates an ImageAnalysis for analyzing the camera feed
    ImageAnalysis imageAnalysis = new ImageAnalysis.Builder()
            .setTargetResolution(new Size(1280, 720))
            // If the analyzer is busy, drop stale frames and keep only the newest
            .setBackpressureStrategy(ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST)
            .build();
    imageAnalysis.setAnalyzer(executor, new ImageAnalysis.Analyzer() {
        @Override
        public void analyze(@NonNull ImageProxy imageProxy) {
            // getImage() is experimental in this CameraX version, hence the lint suppression
            @SuppressLint("UnsafeExperimentalUsageError") Image mediaImage =
                    imageProxy.getImage();
            if (mediaImage != null) {
                // Close the frame only after ML Kit has finished with it
                processImage(mediaImage, imageProxy)
                        .addOnCompleteListener(task -> imageProxy.close());
            } else {
                // Always close the frame, otherwise CameraX stops delivering new ones
                imageProxy.close();
            }
        }
    });
}
/**
 * Sends an InputImage to the ML Kit ObjectDetector for processing.
 *
 * @param mediaImage the Image obtained from the ImageProxy
 * @param imageProxy the ImageProxy frame from the camera feed
 */
private Task<List<DetectedObject>> processImage(Image mediaImage, ImageProxy imageProxy) {
    InputImage image = InputImage.fromMediaImage(mediaImage,
            imageProxy.getImageInfo().getRotationDegrees());
    return objectDetector.process(image)
            .addOnFailureListener(e -> Log.e(TAG, "Failed to process. Error: " + e.getMessage()))
            .addOnSuccessListener(results -> {
                // Keep the most confident label across all detected objects
                String text = "";
                float confidence = 0;
                for (DetectedObject detectedObject : results) {
                    for (DetectedObject.Label label : detectedObject.getLabels()) {
                        if (label.getConfidence() > confidence) {
                            text = label.getText();
                            confidence = label.getConfidence();
                        }
                    }
                }
                TextView textView = findViewById(R.id.resultText);
                TextView confText = findViewById(R.id.confidence);
                if (!text.isEmpty()) {
                    textView.setText(text);
                    confText.setText(String.format("Confidence = %f", confidence));
                } else {
                    textView.setText("Detecting");
                    confText.setText("?");
                }
            });
}

The image analysis use case is built from the “ImageAnalysis” object and its “ImageAnalysis.Analyzer”. The analyzer’s analyze method receives each camera frame as an “ImageProxy” object, which is the image input we feed into our model through the processImage helper method. The ObjectDetector we instantiated earlier processes this input and produces a list of detected objects, each carrying the labels our model assigned to it. To show the result to the user, we take the most confident label and set our two TextViews to that label’s text and confidence. Each ImageProxy must be closed once we are done with it, otherwise CameraX stops delivering new frames.
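The STRATEGY_KEEP_ONLY_LATEST setting keeps the analyzer from falling behind a live stream. While the analyzer is busy, newer frames replace older unanalyzed ones instead of queueing up. A single-slot buffer captures the idea. LatestFrameSlot is a hypothetical, simplified model written in plain Java, not CameraX’s internal implementation.

```java
// Plain-Java model of keep-only-latest backpressure (not CameraX code):
// the producer overwrites a single slot, so a slow consumer always gets the
// newest frame and stale frames are dropped rather than queued.
final class LatestFrameSlot<T> {

    private T pending;   // newest frame not yet analyzed, or null if none
    private int dropped; // frames replaced before anyone analyzed them

    /** Called for every new camera frame. */
    synchronized void offer(T frame) {
        if (pending != null) {
            dropped++; // the older, unanalyzed frame is discarded
        }
        pending = frame;
    }

    /** Called when the analyzer is free; returns null if no new frame is waiting. */
    synchronized T take() {
        T frame = pending;
        pending = null;
        return frame;
    }

    synchronized int droppedCount() {
        return dropped;
    }

    public static void main(String[] args) {
        LatestFrameSlot<String> slot = new LatestFrameSlot<>();
        // Three frames arrive while the analyzer is still busy...
        slot.offer("frame 1");
        slot.offer("frame 2");
        slot.offer("frame 3");
        // ...so when it becomes free it only sees the newest one
        System.out.println(slot.take());         // frame 3
        System.out.println(slot.droppedCount()); // 2
    }
}
```

The trade-off is deliberate: for a live label display, analyzing the most recent frame matters more than analyzing every frame. Latency therefore stays bounded even when inference is slower than the camera’s frame rate.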

Finally, we bind the preview and image analysis use cases to the activity’s lifecycle. This step is crucial: without it, the app would not run the preview or the image analysis at all. The final code for this is here:

/**
 * Creates camera preview and image analyzer to bind to the app's lifecycle.
 *
 * @param cameraProvider a @NonNull camera provider object
 */
private void bindPreviewAndAnalyzer(@NonNull ProcessCameraProvider cameraProvider) {
    // ... preview, cameraSelector and imageAnalysis from the previous steps ...
    // Unbind all previous use cases before binding new ones
    cameraProvider.unbindAll();
    // Attach the use cases to our lifecycle owner, this activity
    cameraProvider.bindToLifecycle(this,
            cameraSelector,
            preview,
            imageAnalysis);
}

This project focuses on object classification, but ML Kit offers many more machine learning features that our simple camera app can build on. With the VAB-950, you can even develop an embedded or edge AI project if you choose to. The future for the VIA VAB-950 is bright, and we are excited to see how far developers and companies alike can take it, and how far it can take them. For more information on hardware capabilities, software compatibility and a complete datasheet, visit the VIA VAB-950 page.

Written by Daniel Swift, AI Development and Marketing Intern at VIA Technologies, Inc., and undergraduate Computer Science student at Aberystwyth University